GPU-accelerated Generation of Heat-Map Textures for Dynamic 3D Scenes: Visualizing the Distribution of Visual Attention in Real-Time

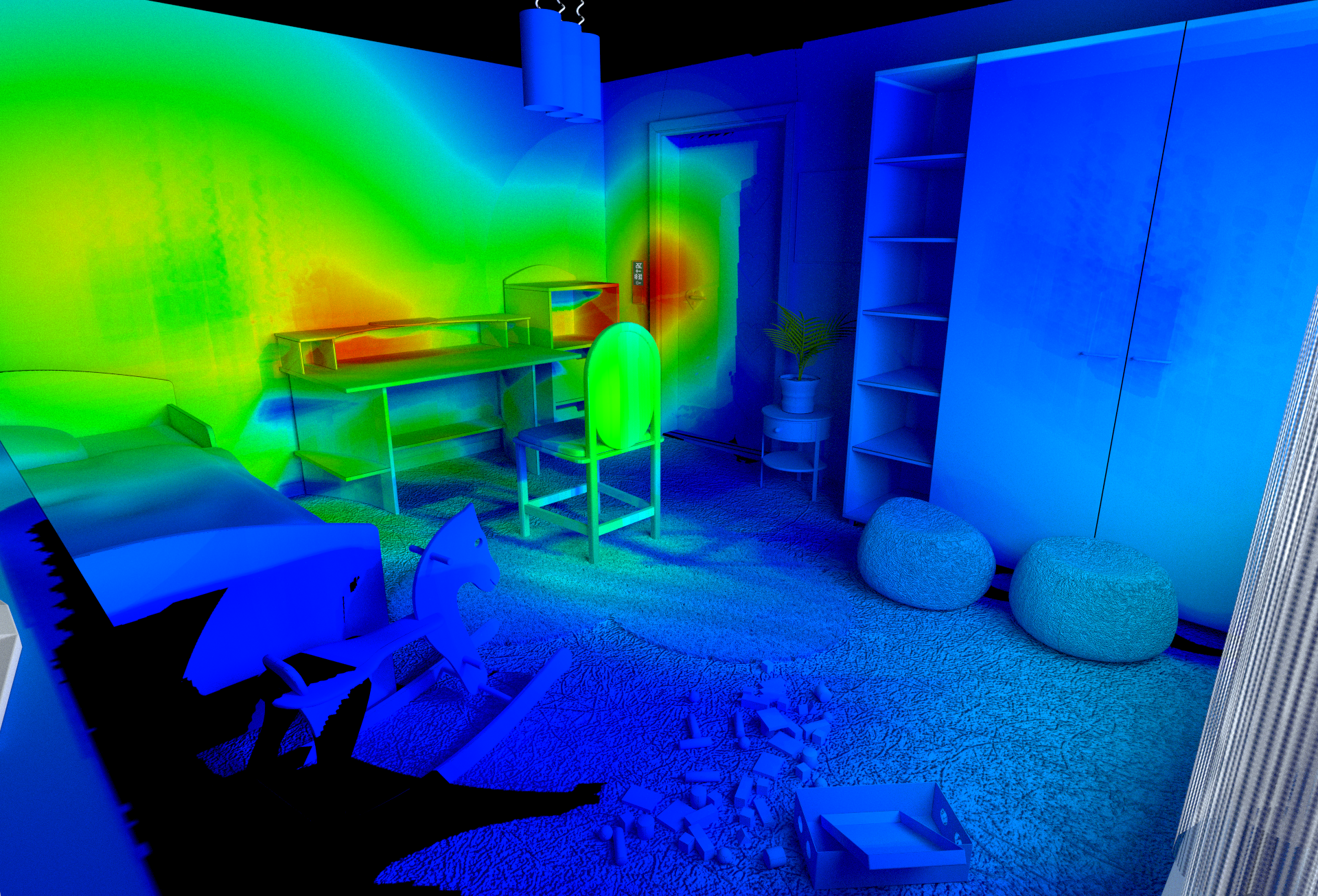

There are many applications for the visualization of 3D distributions (volumetric data) over 3D environments. Examples are the mapping of acoustic data (e.g. noise level), traffic data (e.g. on streets or in shops) or visual saliency as well as visual attention maps for the analysis of human behavior. We developed a GPU-accelerated approach which allows for real-time representation of 3D distributions in object-based textures. These distributions can then be visualized as heat maps that are visually overlaid on the original scene geometry using multi-texturing. Applications of this approach are demonstrated in the context of visualizing live data on visual attention as measured by a 3D eye-tracking system. The presented approach is unique in that it works with monocular and binocular data, respects the depth of focus, can handle moving objects and outputs a set of persistent heat map textures for creating high-end photo-realistic renderings.
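To illustrate the overlay step, here is a minimal Python sketch of how a normalized attention value could be turned into a heat-map color and blended over the base color of a texel. The color ramp, the names `heat_color` and `overlay`, and the opacity rule (`strength` times the normalized value) are assumptions made for illustration, not the exact scheme used in the demo. On the GPU, the same arithmetic would run per fragment in a shader that samples the base texture and the Attention Texture as two texture layers.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]

# Color stops of an illustrative blue -> cyan -> green -> yellow -> red ramp.
_STOPS = [
    (0.0, (0.0, 0.0, 1.0)),
    (0.25, (0.0, 1.0, 1.0)),
    (0.5, (0.0, 1.0, 0.0)),
    (0.75, (1.0, 1.0, 0.0)),
    (1.0, (1.0, 0.0, 0.0)),
]


def heat_color(t: float) -> RGB:
    """Map a normalized attention value t in [0, 1] to an RGB heat color."""
    t = min(max(t, 0.0), 1.0)
    for (t0, c0), (t1, c1) in zip(_STOPS, _STOPS[1:]):
        if t <= t1:
            f = (t - t0) / (t1 - t0)  # position between the two stops
            return tuple(a + f * (b - a) for a, b in zip(c0, c1))
    return _STOPS[-1][1]


def overlay(base: Sequence[float], attention: float, max_attention: float,
            strength: float = 0.6) -> RGB:
    """Blend the heat color over a texel's base color.

    Texels without attention keep their base color; the opacity of the heat
    color grows linearly with the normalized attention (assumed rule).
    """
    if max_attention <= 0.0 or attention <= 0.0:
        return tuple(base)
    t = attention / max_attention
    alpha = strength * min(t, 1.0)
    heat = heat_color(t)
    return tuple((1.0 - alpha) * b + alpha * h for b, h in zip(base, heat))
```

Because the heat map lives in its own texture, the base textures of the scene stay untouched and the overlay can be switched off or restyled without recomputing any attention data.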

Demonstration Video

On our website you will find further information about eye tracking in 3D worlds.

The algorithm in a nutshell

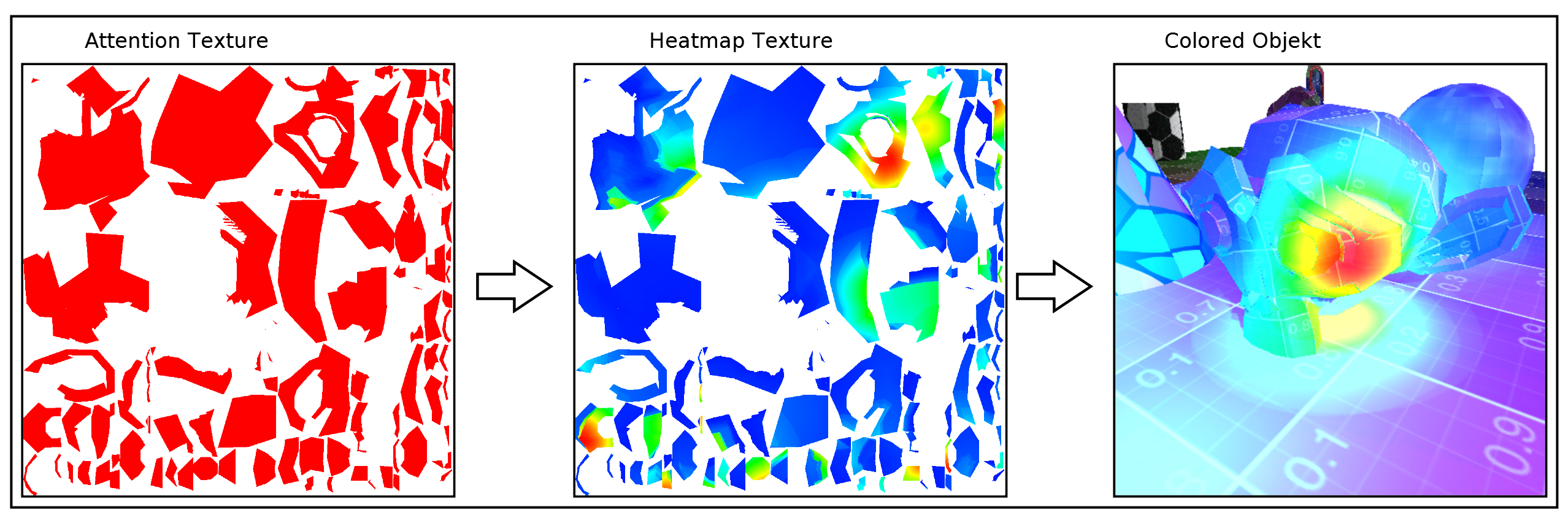

The basic principle behind our approach is a specific texture workflow: every model holds an Attention Texture that stores its attention values. As a result, every mesh region that is covered by texture coordinates can automatically store attention values in the corresponding region of its Attention Texture.
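One common way to give every texel of an Attention Texture a position in the scene is to rasterize each mesh triangle in texture (UV) space and interpolate the world-space vertex positions with barycentric weights. The page does not spell out how this mapping is implemented, so the following function (`rasterize_triangle_uv`, a hypothetical name) is only a minimal CPU sketch of that idea, assuming a square texture and UVs in [0, 1].

```python
from typing import Dict, List, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def rasterize_triangle_uv(
    uvs: List[Vec2], positions: List[Vec3], size: int
) -> Dict[Tuple[int, int], Vec3]:
    """Return the 3D surface point behind every texel covered by a triangle.

    uvs: the triangle's three texture coordinates in [0, 1]^2
    positions: the matching three world-space vertex positions
    size: resolution of the (square) Attention Texture in texels
    """
    (u0, v0), (u1, v1), (u2, v2) = uvs
    # Twice the signed area of the triangle in UV space.
    area = (u1 - u0) * (v2 - v0) - (u2 - u0) * (v1 - v0)
    if area == 0.0:
        return {}  # degenerate UV triangle covers no texels
    # Texel bounding box of the triangle, clamped to the texture.
    xs = [u * size for u, _ in uvs]
    ys = [v * size for _, v in uvs]
    x_min, x_max = max(int(min(xs)), 0), min(int(max(xs)) + 1, size)
    y_min, y_max = max(int(min(ys)), 0), min(int(max(ys)) + 1, size)
    covered = {}
    for y in range(y_min, y_max):
        for x in range(x_min, x_max):
            # Sample at the texel center.
            u, v = (x + 0.5) / size, (y + 0.5) / size
            # Barycentric weights of the texel center.
            w1 = ((u - u0) * (v2 - v0) - (u2 - u0) * (v - v0)) / area
            w2 = ((u1 - u0) * (v - v0) - (u - u0) * (v1 - v0)) / area
            w0 = 1.0 - w1 - w2
            if min(w0, w1, w2) < 0.0:
                continue  # texel center lies outside the triangle
            # Interpolate the world-space position with the same weights.
            covered[(x, y)] = tuple(
                w0 * a + w1 * b + w2 * c for a, b, c in zip(*positions)
            )
    return covered
```

Each covered texel then knows the 3D surface point it stores attention for, which is exactly what a per-texel distance computation needs.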

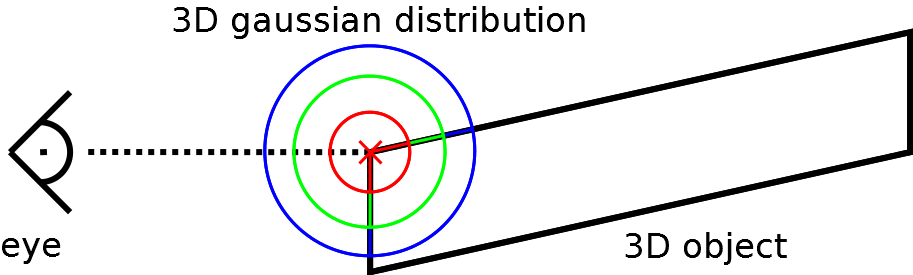

Applying the attention values to the Attention Textures is the most demanding task. It is performed in a shader that computes the attention value to be added to each pixel of the texture and writes it. First, we identify the 3D point of regard in the scene. By computing the 3D distance from the surface point of each pixel to this point, we can:

- Limit colorization to a certain distance from the point.

- Adjust the added attention value in relation to the distance.
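The two bullet points above can be sketched in Python as follows. The page does not say how the 3D point of regard is derived; for binocular data, one common option (assumed here) is to take the midpoint of the shortest segment between the two gaze rays, while monocular data would instead require casting the gaze ray into the scene. The linear falloff `1 - d / radius` and the names `point_of_regard` and `add_attention` are likewise assumptions for illustration; the actual shader may weight distances differently.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def point_of_regard(o_l: Vec3, d_l: Vec3, o_r: Vec3, d_r: Vec3) -> Optional[Vec3]:
    """Midpoint of the closest approach between the left and right gaze lines.

    o_*: eye positions, d_*: gaze directions (rays treated as infinite lines).
    Returns None for (nearly) parallel gaze, where vergence gives no depth.
    """
    w0 = tuple(x - y for x, y in zip(o_l, o_r))
    a, b, c = _dot(d_l, d_l), _dot(d_l, d_r), _dot(d_r, d_r)
    d, e = _dot(d_l, w0), _dot(d_r, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None
    s = (b * e - c * d) / denom  # parameter along the left gaze line
    t = (a * e - b * d) / denom  # parameter along the right gaze line
    p_l = tuple(o + s * k for o, k in zip(o_l, d_l))
    p_r = tuple(o + t * k for o, k in zip(o_r, d_r))
    return tuple((x + y) / 2.0 for x, y in zip(p_l, p_r))


def add_attention(texel_positions: Dict[Tuple[int, int], Vec3],
                  attention: Dict[Tuple[int, int], float],
                  por: Vec3, radius: float, weight: float = 1.0) -> None:
    """Add distance-weighted attention to every texel near the point of regard."""
    for texel, pos in texel_positions.items():
        dist = math.dist(pos, por)
        if dist > radius:
            continue  # limit colorization to a certain distance
        # Linear falloff: full weight at the point, zero at the radius.
        attention[texel] = attention.get(texel, 0.0) + weight * (1.0 - dist / radius)
```

In the shader, the loop body is what each pixel of an Attention Texture would execute independently, which is why the per-pixel work maps well onto the GPU.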

To handle occlusions between objects, we added an intermediate step in which shadow maps are computed for the defined eyes. This way, each pixel of an Attention Texture can look up whether it is occluded or visible before attention values are added. However, because our example demo uses a basic shadow-mapping approach, it suffers from the typical shadow-mapping issues.
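The per-pixel occlusion lookup can be sketched as a standard shadow-map depth test: the pixel's surface point is projected with the eye's view-projection matrix, and its depth is compared with the nearest depth the eye recorded at that location. The function name `visible_from_eye`, the row-major matrix layout, the depth range [0, 1] and the constant `bias` (a common mitigation for self-shadowing artifacts) are assumptions of this minimal sketch; with binocular data there would be one such depth map per eye.

```python
from typing import List, Sequence

Mat4 = Sequence[Sequence[float]]  # row-major 4x4 view-projection matrix


def visible_from_eye(world_pos: Sequence[float], view_proj: Mat4,
                     depth_map: List[List[float]], bias: float = 1e-3) -> bool:
    """Shadow-map style test: is world_pos the surface closest to the eye?

    depth_map[row][col] holds the nearest depth seen by the eye, in [0, 1].
    """
    x, y, z = world_pos
    clip = [r[0] * x + r[1] * y + r[2] * z + r[3] for r in view_proj]
    w = clip[3]
    if w <= 0.0:
        return False  # point lies behind the eye
    ndc = [c / w for c in clip[:3]]
    if any(abs(c) > 1.0 for c in ndc):
        return False  # outside the eye's view frustum
    rows, cols = len(depth_map), len(depth_map[0])
    # Map NDC [-1, 1] to depth-map texel indices and depth to [0, 1].
    col = min(int((ndc[0] * 0.5 + 0.5) * cols), cols - 1)
    row = min(int((ndc[1] * 0.5 + 0.5) * rows), rows - 1)
    depth = ndc[2] * 0.5 + 0.5
    # Visible unless something nearer (beyond the bias) was recorded here.
    return depth <= depth_map[row][col] + bias
```

The single fixed `bias` and the one-sample lookup are precisely the simplifications that cause the typical shadow-mapping artifacts, such as acne on surfaces or aliased occlusion borders.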

Displaying the colorized scene while attention data is being projected would usually double the computational cost. This is because the heat map has to be normalized by the global attention maximum, and determining that maximum and updating the textures to normalized values requires a second iteration over all Attention Textures. To avoid this, we use one additional texture, called the Max Attention Texture, which is bound simultaneously while each of the Attention Textures is updated. Whenever the attention value of an updated pixel in an Attention Texture exceeds the value of the same pixel in the Max Attention Texture, this new local maximum is written there as well. The Max Attention Texture therefore always contains the global attention maximum in one of its pixels. Combined with parallel reductions over this single texture, the global attention maximum can be computed more efficiently.
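A minimal Python sketch of the Max Attention Texture idea follows, assuming that all Attention Textures share the resolution of the Max Attention Texture and are stored as flat lists. The hypothetical `accumulate_with_max` keeps the per-pixel maximum up to date during the regular update pass, and the hypothetical `parallel_max_reduction` shows the tree-shaped reduction that a GPU would run with one thread per pair in each round; here the rounds run serially.

```python
from typing import Iterable, List, Tuple


def accumulate_with_max(attention_textures: List[List[float]],
                        max_texture: List[float],
                        updates: Iterable[Tuple[int, int, float]]) -> None:
    """Add attention and keep the per-pixel maximum in max_texture.

    updates yields (texture_index, pixel_index, added_value); all textures
    are flat lists with the same resolution as max_texture.
    """
    for tex, pixel, value in updates:
        attention_textures[tex][pixel] += value
        new_value = attention_textures[tex][pixel]
        if new_value > max_texture[pixel]:
            max_texture[pixel] = new_value  # new local maximum


def parallel_max_reduction(values: List[float]) -> float:
    """Tree reduction: each round halves the data by pairwise maxima."""
    data = list(values)
    if not data:
        return 0.0
    while len(data) > 1:
        if len(data) % 2:
            data.append(data[-1])  # pad odd lengths; harmless for max
        # On a GPU, every pair of a round would be handled by its own thread.
        data = [max(data[i], data[i + 1]) for i in range(0, len(data), 2)]
    return data[0]
```

Because the reduction only touches the single Max Attention Texture, its cost does not grow with the number of models in the scene; each attention value can then be normalized for display by dividing it by the result.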

Further Reading: IEEE VR2015 Poster

Software

Here you can download our software demo:

- Program: AttentionVisualizer 1.0

- Manual (strongly advised): Readme

- Blender files for exported texture viewing: BMW Blender File

Involved People

| Role | Name |
|---|---|
| Postdoctoral Researcher | Dr. Thies Pfeiffer |
| Student Researchers | Cem Memili |